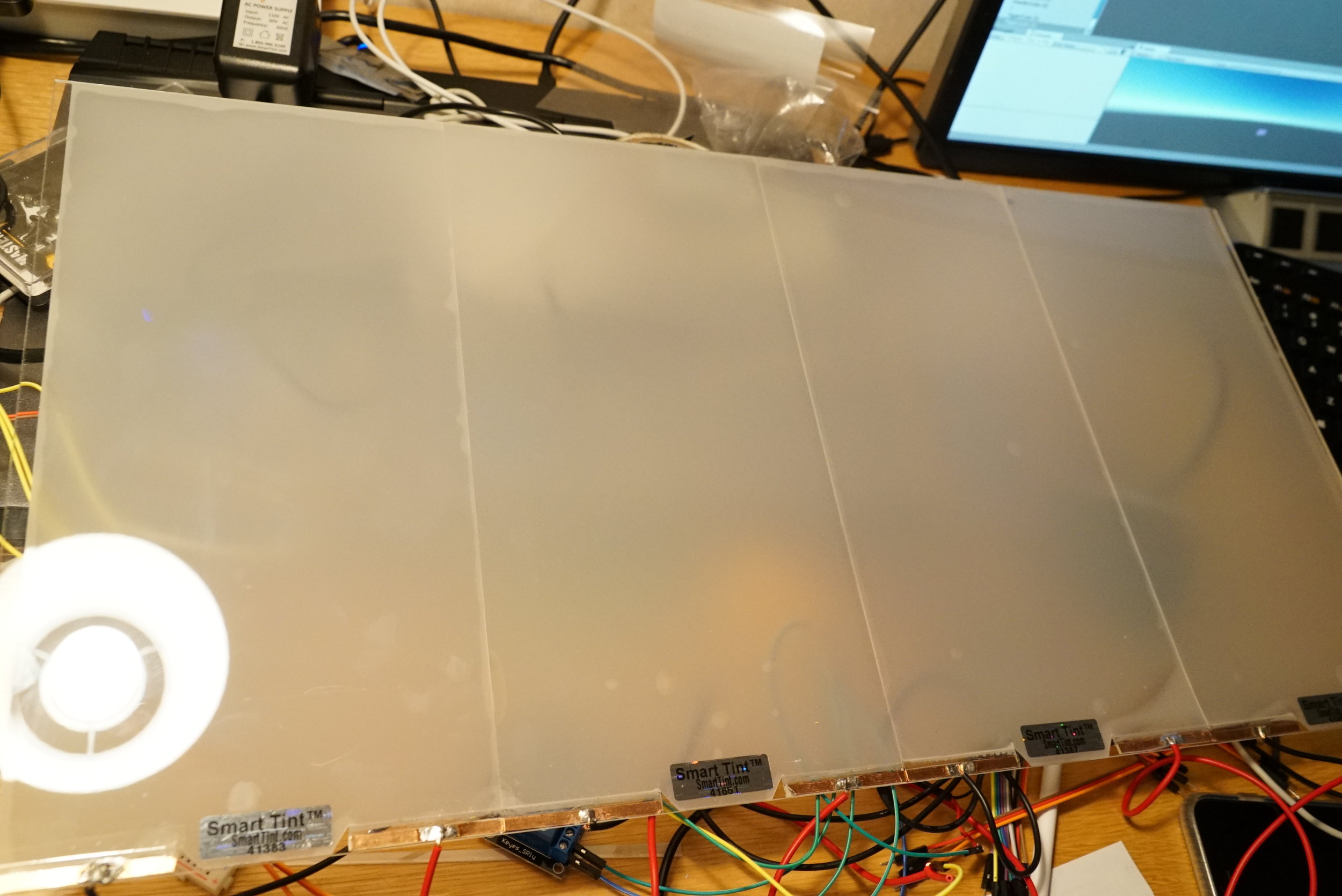

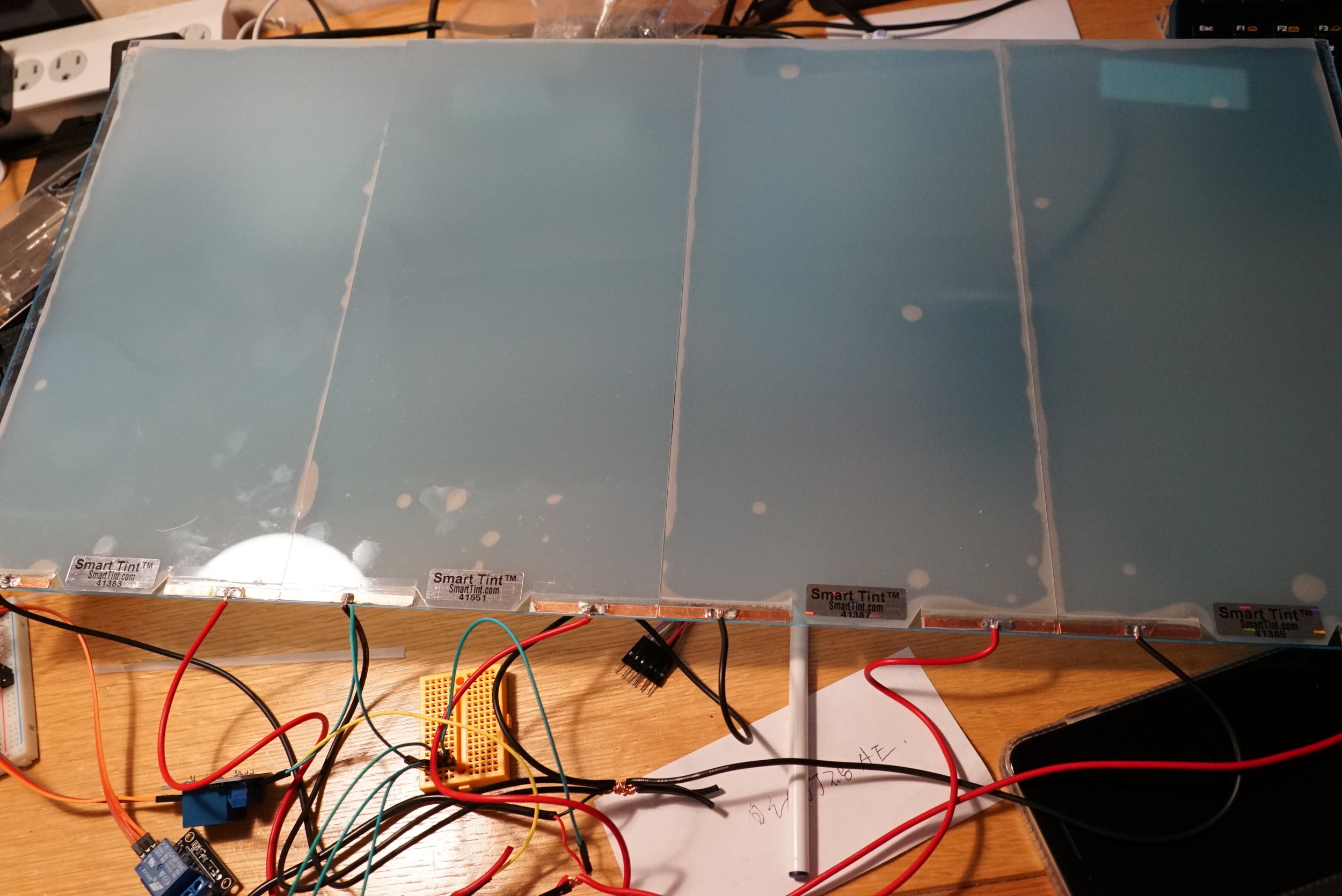

Demo Reel of 5 apps running on the new WindowLightScreen

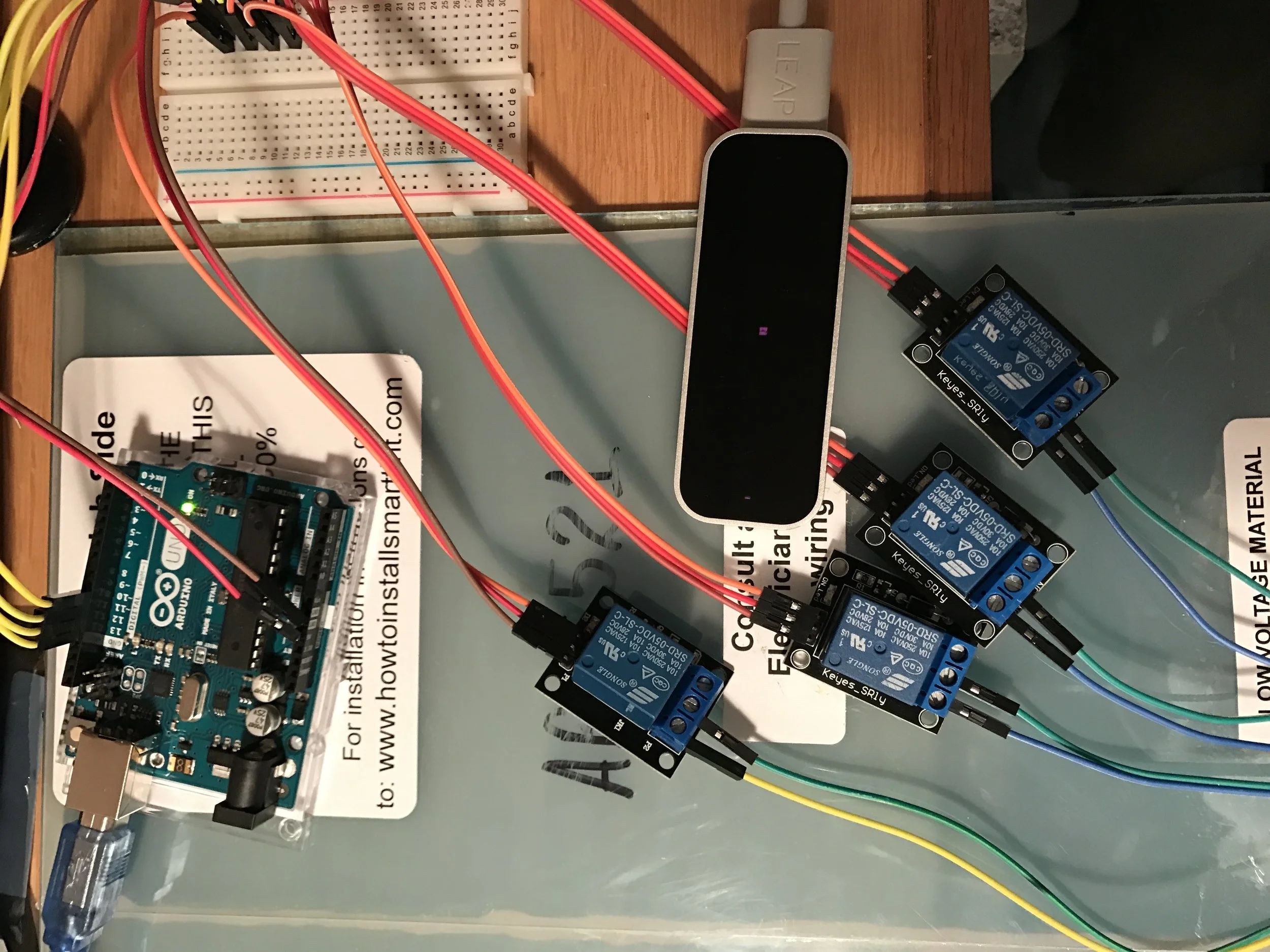

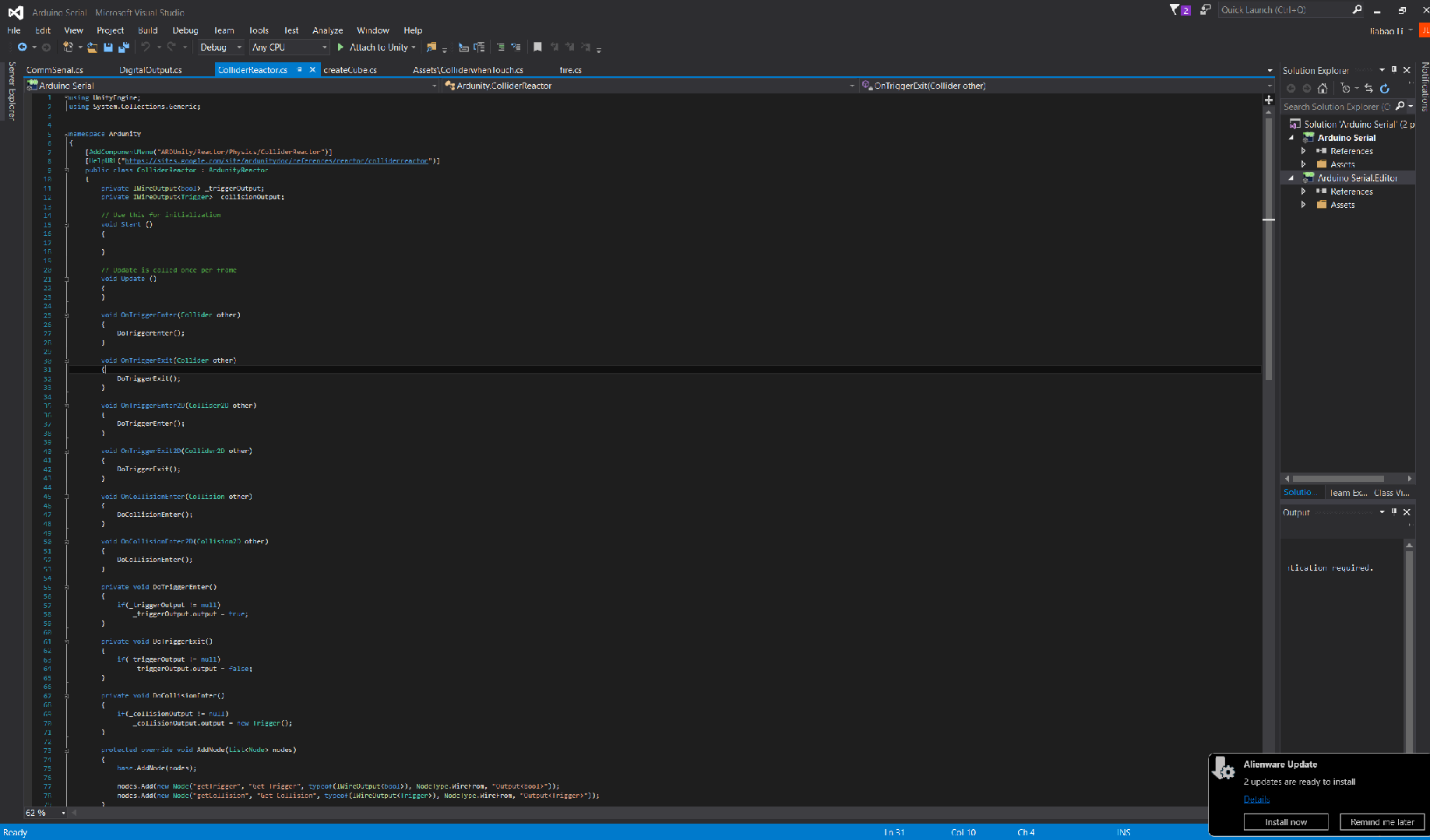

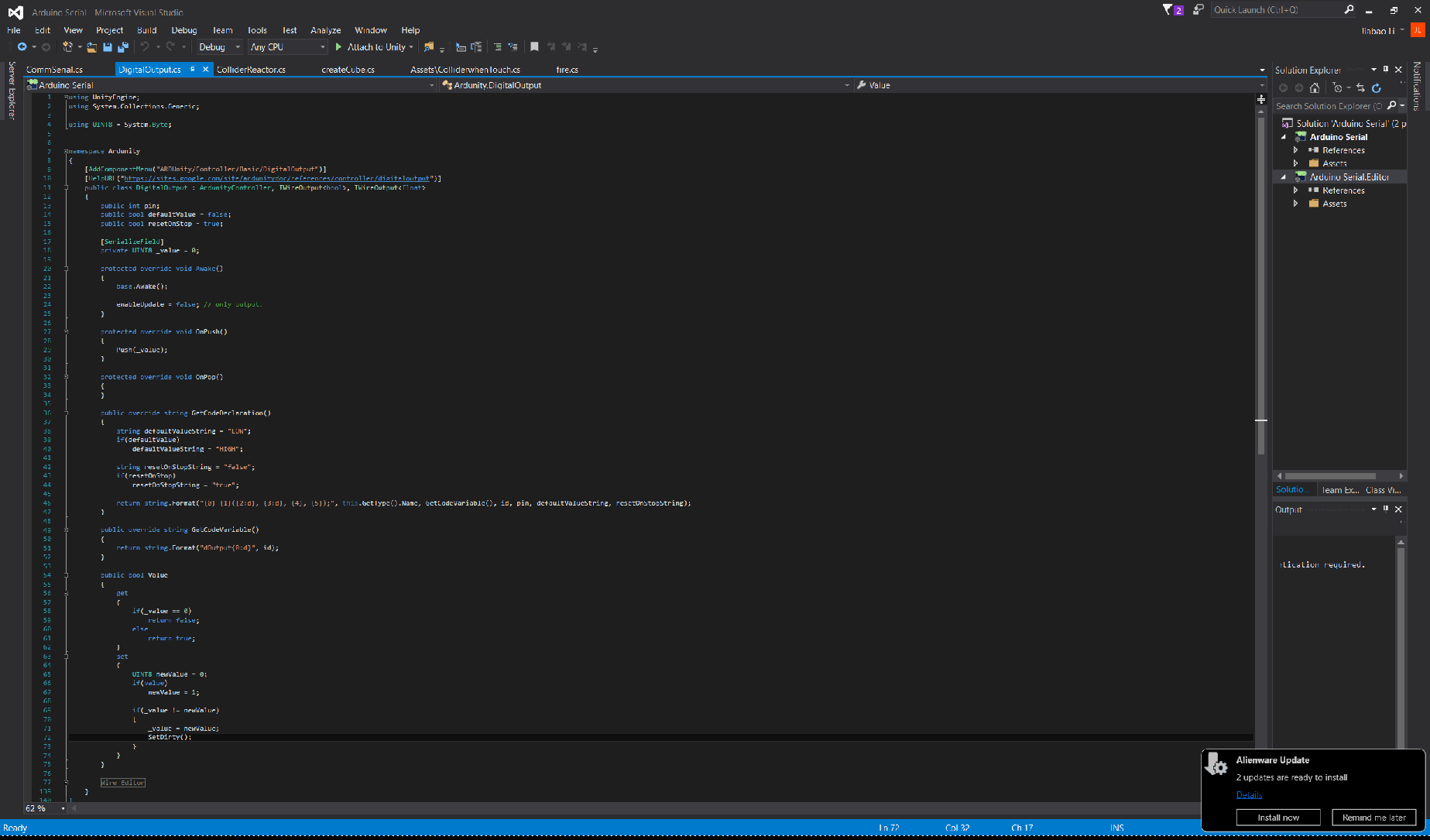

As for the input, it should ideally be a machine-learned prediction of people's behavior, trained on data captured by the sensors on the infrastructure that I showed in the last post.

5 apps:

1. Transform any glass wall into a Light/Window/Screen.
2. Transform any window into a Screen/Light.
3. A future Spotify/Pandora app on this platform.
4. A future Sleep/Focus app on this platform.
5. A future Shower app on this platform.

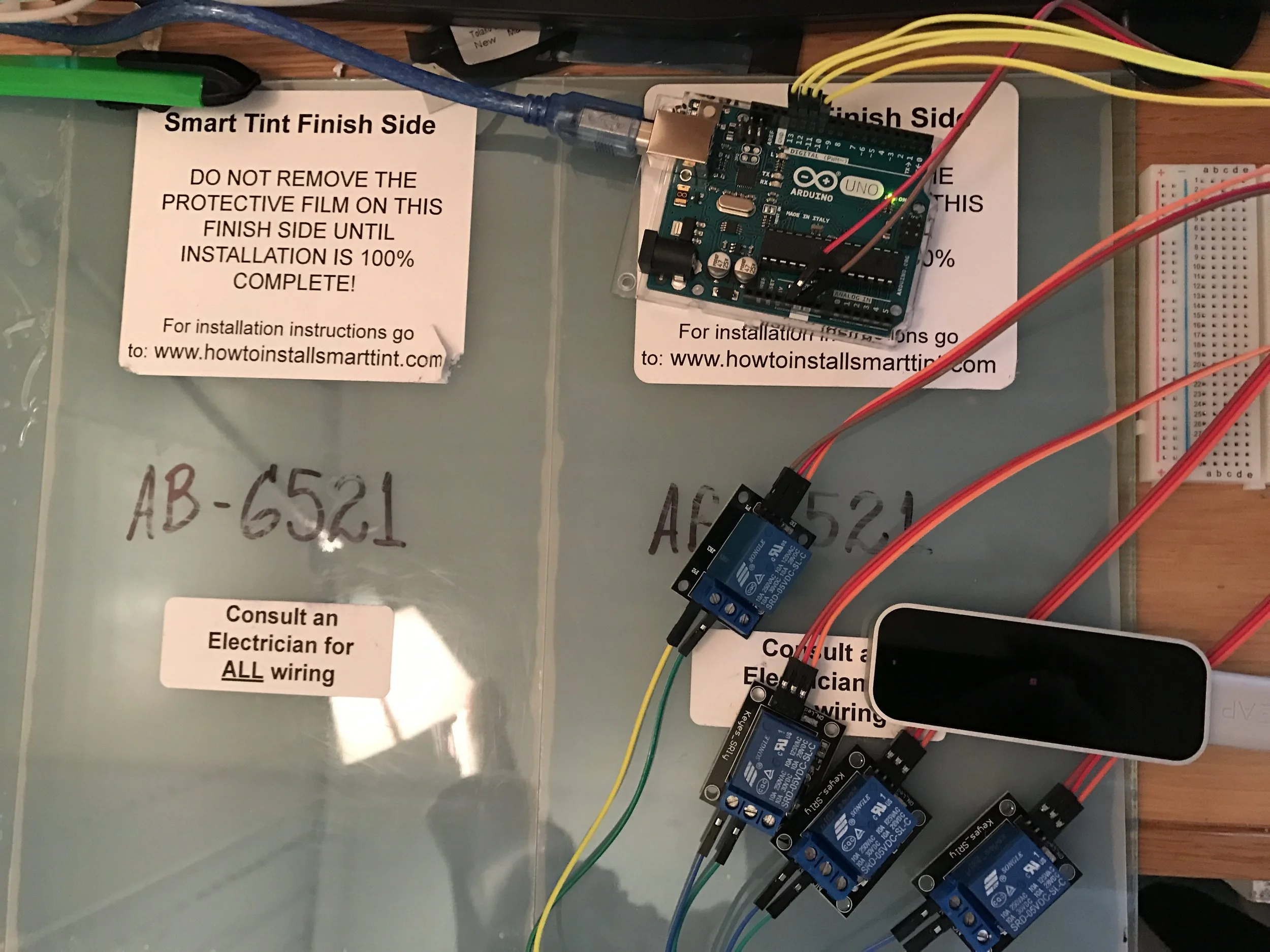

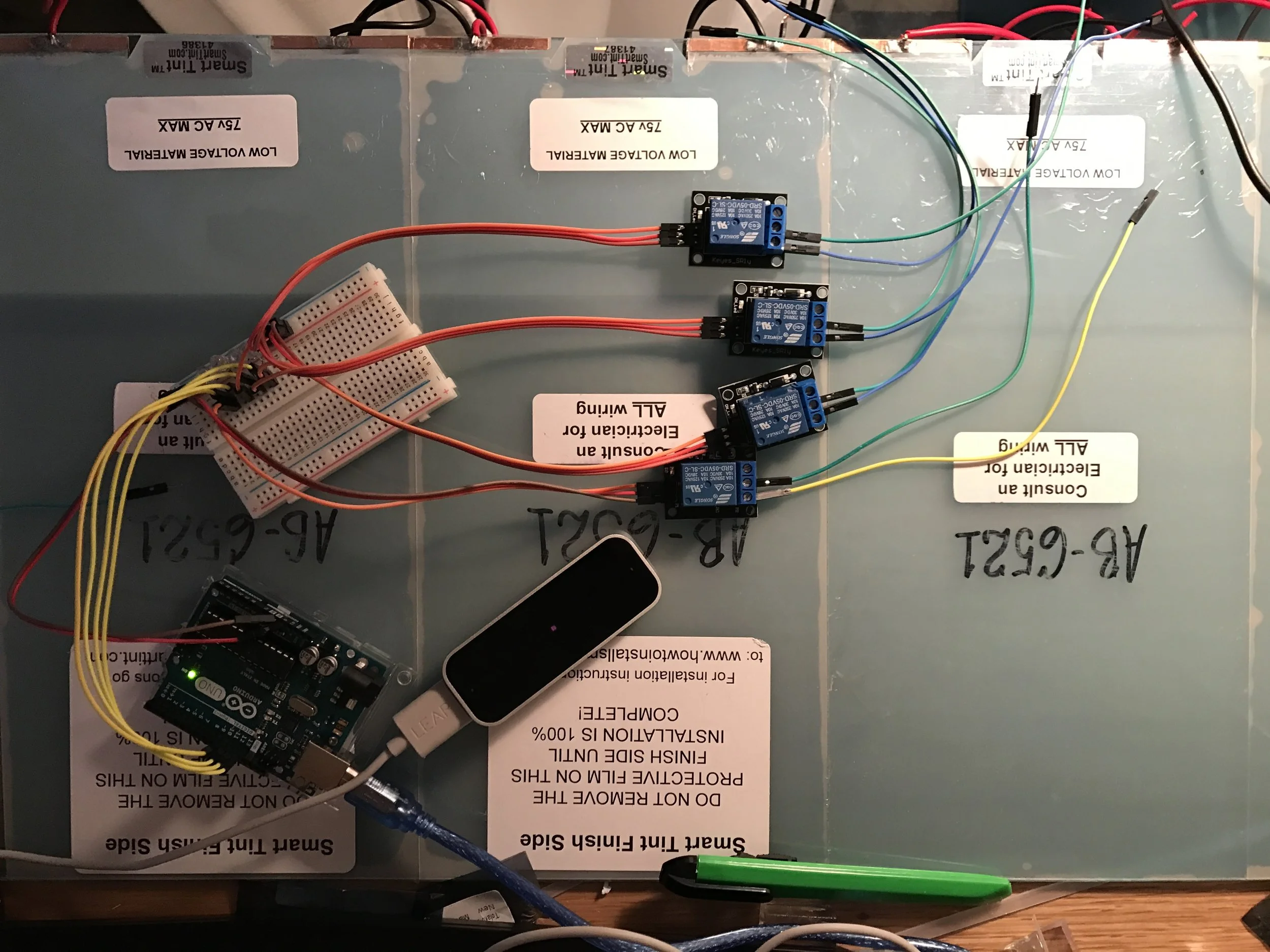

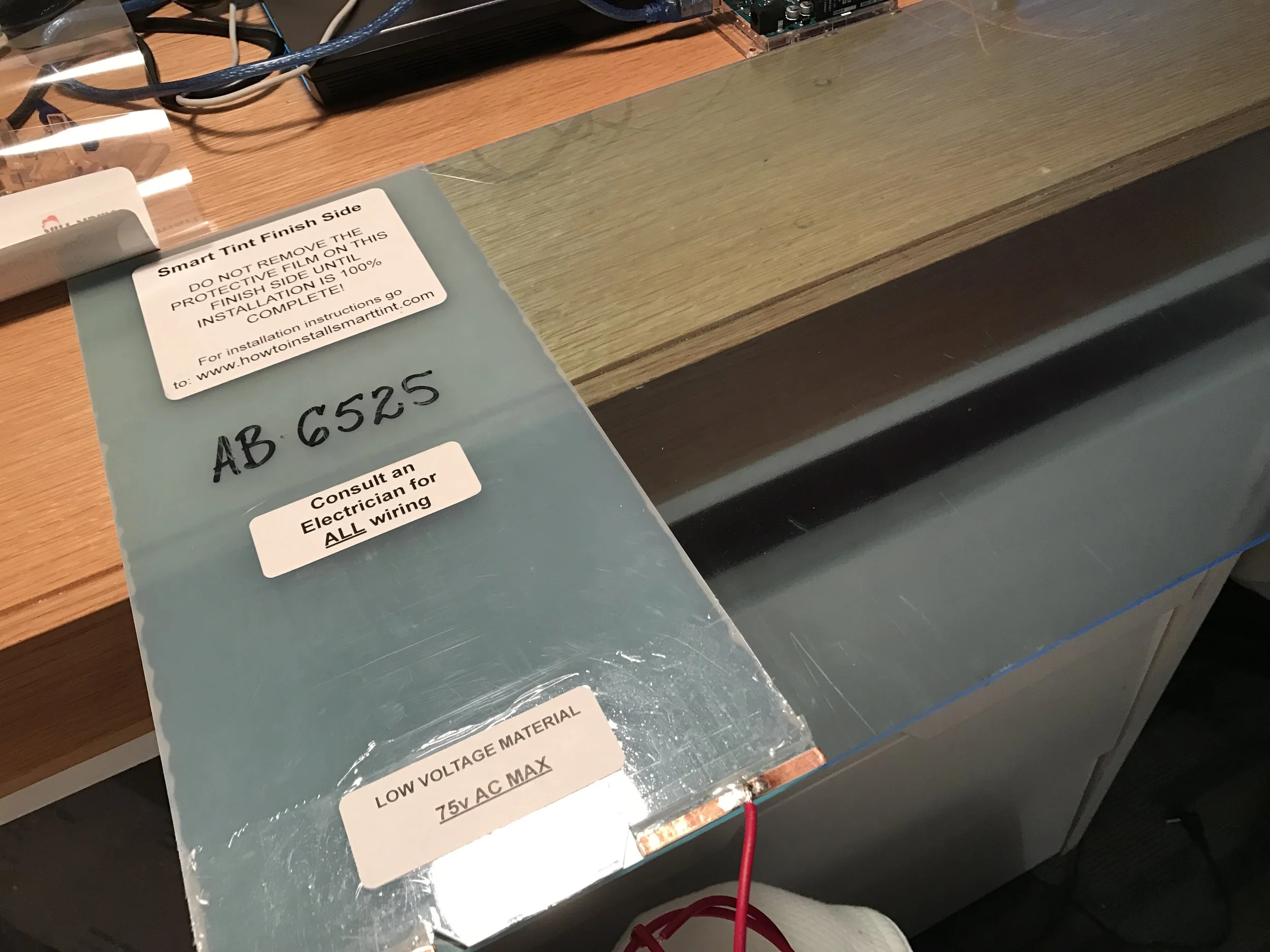

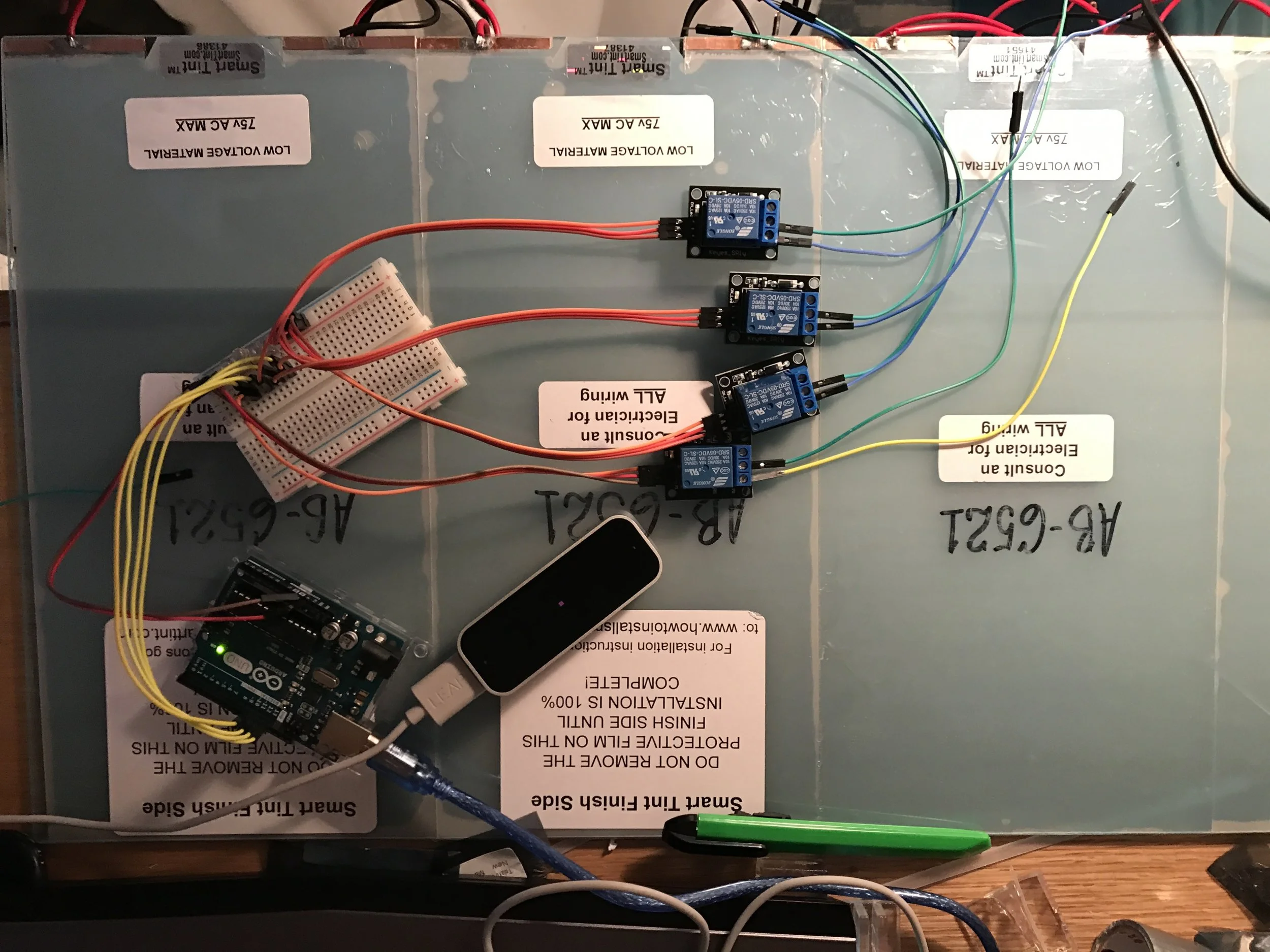

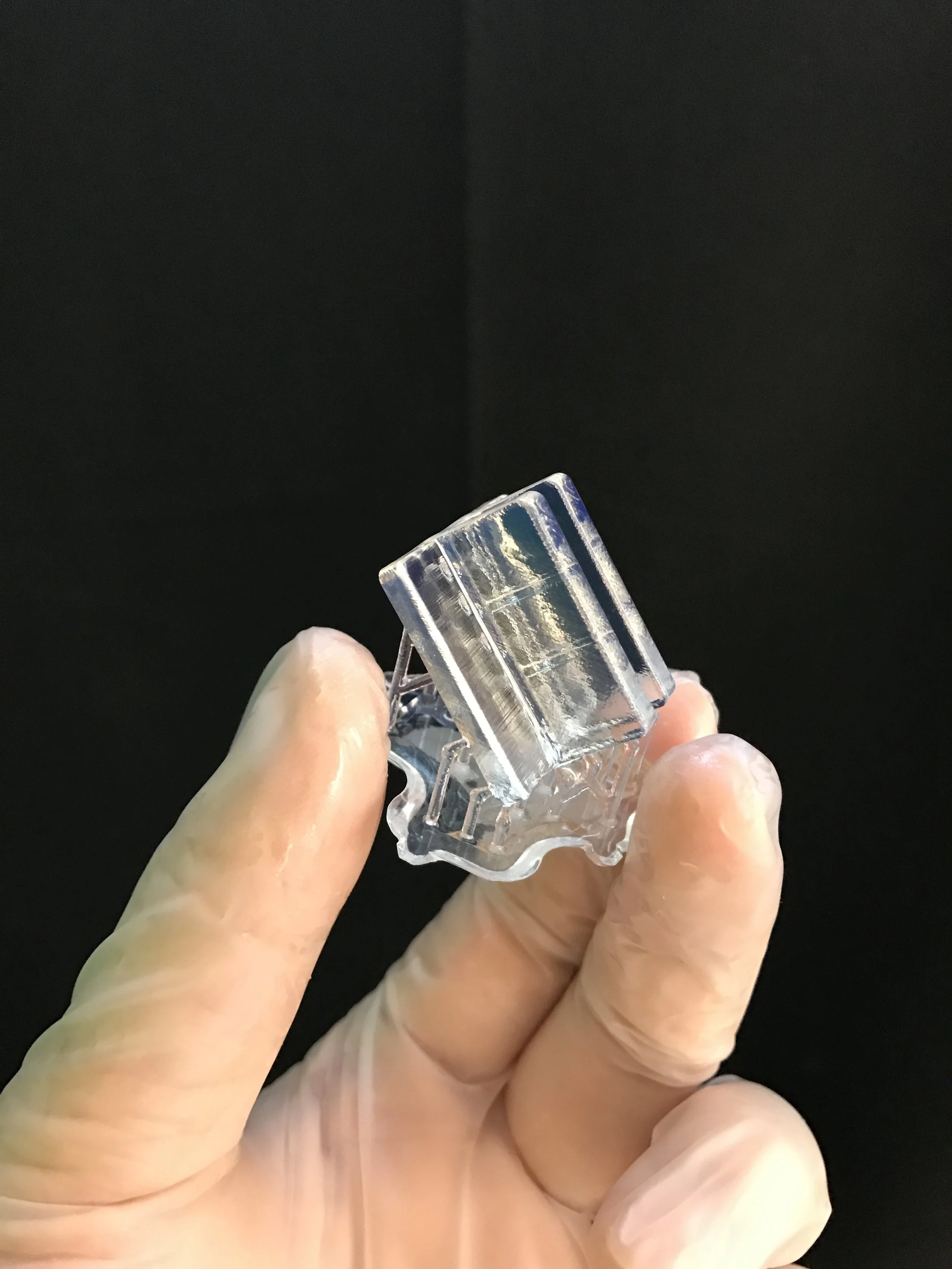

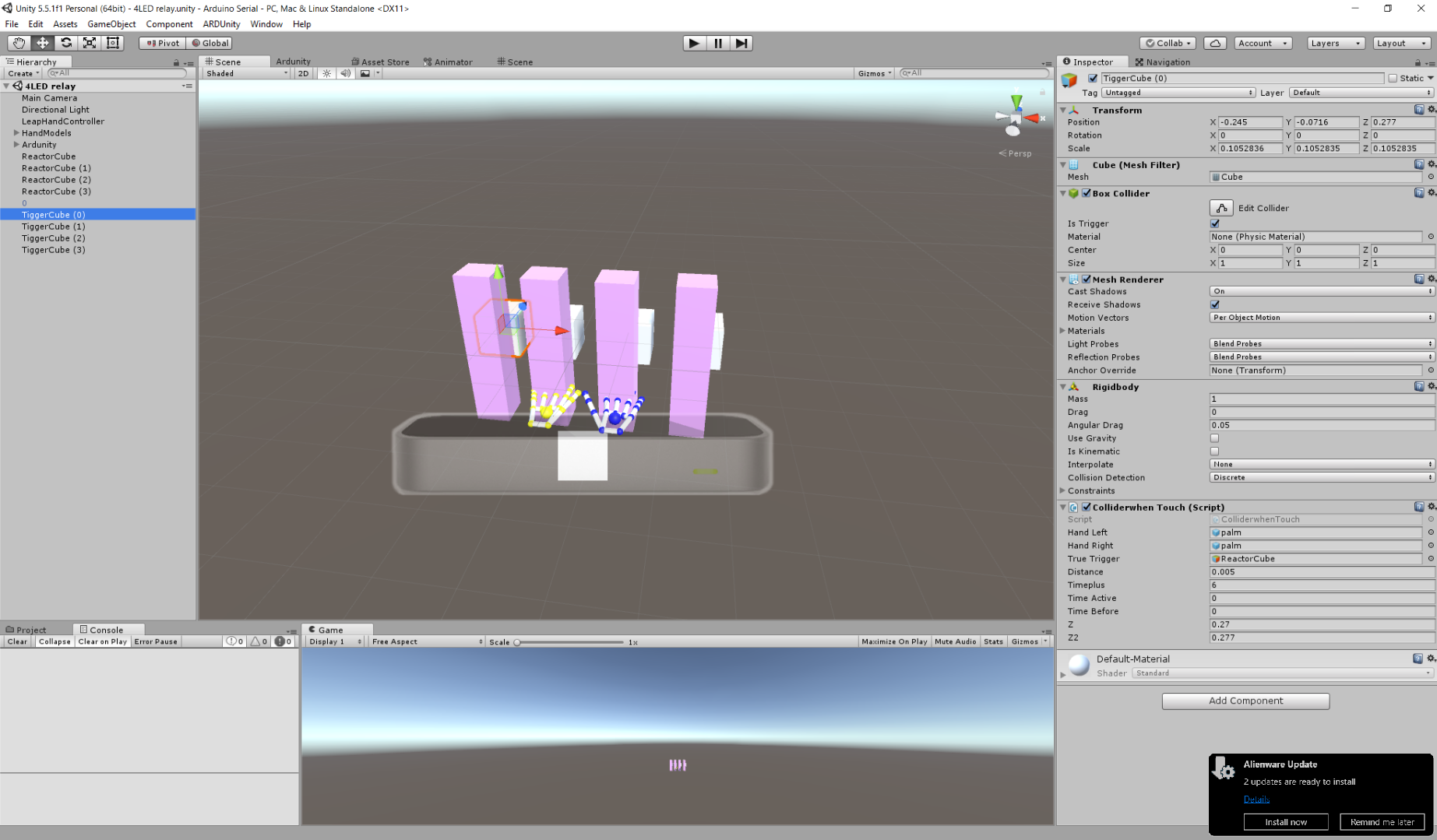

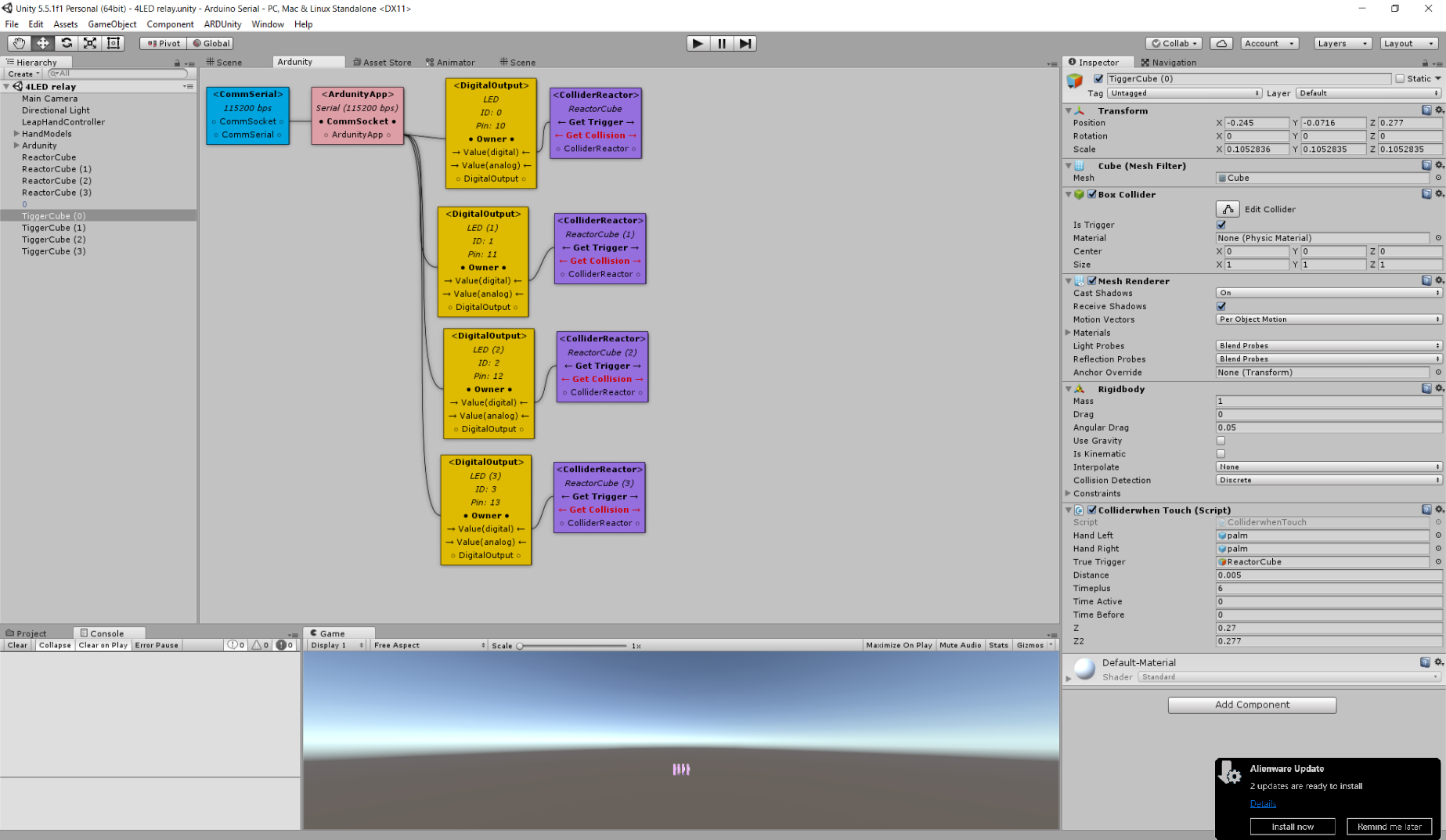

The prototype itself is a combined unit of Screens/Windows/Lights/Blinds. It is an experiment in merging several home devices into a single powerful, scalable unit/platform. This approach keeps the platform uniform and allows maximum freedom to develop new applications that use multiple functions on the same unit. Corporations today are building protocols with different organizations to connect hundreds of different products into one family. However, that still doesn't solve the problem of the enormous customization each new app requires: the number of different devices a household can have makes each home's setup very likely to be unique. Even if all the smart devices in a single home are combined into one system, the result from home to home is like smartphones of different "versions" (some missing a gyroscope, some missing a touch screen, etc.). So I think another possibility is that the devices should be a small, strongly bonded family in the first place: powerful units that each integrate multiple interfaces/functions at home.
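To make the "different versions" problem concrete, here is a minimal sketch in Python. Everything in it is hypothetical and only for illustration: the capability names, the three example households, and the assumption that a sleep/focus app would need lights, blinds, and a screen. It is not the prototype's software. It simply checks which capabilities an app would be missing in each home.

```python
from dataclasses import dataclass

# Hypothetical capability names for the combined unit described in the post.
UNIT_CAPABILITIES = frozenset({"screen", "window", "light", "blind"})


@dataclass(frozen=True)
class App:
    name: str
    requires: frozenset  # capabilities the app needs in order to run


def can_run(app, capabilities):
    """True if every capability the app requires is available."""
    return app.requires <= capabilities


def missing(app, capabilities):
    """Sorted list of required capabilities that are not available."""
    return sorted(app.requires - capabilities)


# Illustrative households: two with mixed separate devices, one with the unit.
households = {
    "home_a": frozenset({"light", "screen"}),
    "home_b": frozenset({"blind", "light"}),
    "home_c": UNIT_CAPABILITIES,
}

# Assumed requirements for a sleep/focus app (not specified in the post).
sleep_app = App("sleep_focus", frozenset({"light", "blind", "screen"}))

# What the app would lack in each home.
report = {home: missing(sleep_app, caps) for home, caps in households.items()}

for home, gaps in report.items():
    status = "runs as-is" if not gaps else "needs customization for: " + ", ".join(gaps)
    print(f"{home}: {status}")
```

In this sketch, the two homes with mixed devices each lack something different, so the app would need a different workaround in each, while the home with the combined unit needs none.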